
Exploring the interactions between sound, action and vision in robotics

In recent years, researchers have developed a growing number of computational techniques to give robots human-like capabilities. Most techniques developed so far, however, focus only on artificially reproducing the senses of vision and touch, disregarding other senses such as auditory perception.

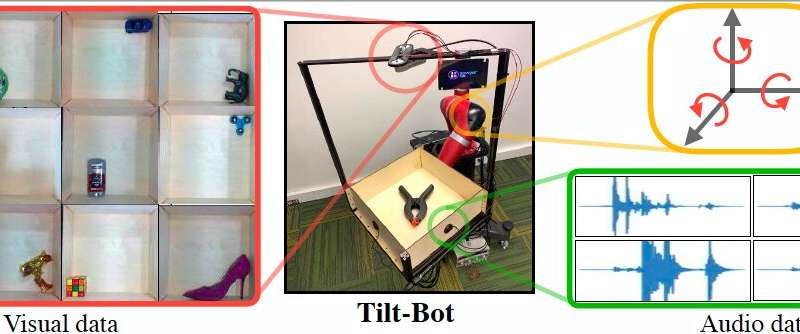

A research team at Carnegie Mellon University (CMU) has recently carried out a study exploring whether sound can be used to give robots more advanced sensing capabilities. Their paper, published in Robotics: Science and Systems, introduces the largest sound-action-vision dataset compiled to date, which was collected as a robotic platform called Tilt-Bot interacted with a wide variety of objects.

"In robot learning, we often only use visual inputs for perception, but humans have more sensory modalities than just vision," Lerrel Pinto, one of the researchers who carried out the study, told TechXplore. "Sound is a key component of learning and understanding our physical environment. So, we asked the question: What can sound buy us in robotics? To answer this question, we created Tilt-Bot, a robot that can interact with objects and collect a large-scale audio-visual dataset of interactions."

Essentially, Tilt-Bot is a robotic tray that tilts objects until they hit one of the tray's walls. Pinto and his colleagues placed contact microphones on the tray's walls to record the sounds produced when objects hit them, and used an overhead camera to capture each object's movements.
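The article does not say how the recordings are stored or processed. As a purely illustrative sketch, assuming one contact microphone per wall and waveforms stored as lists of floats, a single interaction could be represented as below, together with a simple heuristic that guesses which wall was struck by comparing signal energy across the microphones. The names `Interaction`, `loudest_wall` and `impact_index`, the tilt encoding and the threshold are hypothetical and are not taken from the CMU team's code or dataset format.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Interaction:
    """One hypothetical Tilt-Bot-style sample (field names are illustrative only)."""
    tilt_action: Tuple[float, float]  # assumed (roll, pitch) tilt command, in degrees
    audio: List[List[float]]          # assumed: one waveform per wall contact microphone
    frames: List[bytes]               # overhead-camera frames (raw bytes placeholder)


def rms(signal: List[float]) -> float:
    """Root-mean-square energy of a waveform; 0.0 for an empty recording."""
    if not signal:
        return 0.0
    return (sum(x * x for x in signal) / len(signal)) ** 0.5


def loudest_wall(sample: Interaction) -> int:
    """Index of the microphone (and so, by assumption, the wall) with the most energy."""
    energies = [rms(channel) for channel in sample.audio]
    return max(range(len(energies)), key=energies.__getitem__)


def impact_index(signal: List[float], threshold: float) -> int:
    """First sample whose absolute amplitude exceeds `threshold`, or -1 if none does."""
    for i, x in enumerate(signal):
        if abs(x) > threshold:
            return i
    return -1


# Toy example with four made-up microphone channels.
sample = Interaction(
    tilt_action=(10.0, 0.0),
    audio=[
        [0.0, 0.01, -0.02],
        [0.0, 0.4, -0.6],
        [0.0, 0.0, 0.01],
        [0.02, -0.01, 0.0],
    ],
    frames=[],
)
print(loudest_wall(sample))                # 1
print(impact_index(sample.audio[1], 0.3))  # 1
```

A real pipeline would more likely use proper onset detection and learned audio features; the energy comparison is only meant to show how microphones on each wall can help localize a collision.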
